For our recent flooding project, we devised a new means of user experience testing that could be carried out under COVID-19 restrictions. In Part 1 of this blog we described how we set up and carried out remote user testing.

Once the flooding user testing sessions were complete, it was time to start analysing the feedback from the videos. We watched the videos and started to pull out insights from what participants were saying or doing during the session.

The next step was to identify the key insights and the common issues that most users encountered. A key insight could be something that the participant found challenging or confusing. After gathering key insights from all participants, we could then focus on them and decide whether and how to make improvements.

Analysing the user testing feedback

We set up a workshop attended by ourselves (UX designers), the product owner, business analyst, content designer and developer on the project. This way, we’d each get an understanding of the issues based on the feedback. During the workshop we discussed the key insights and common themes and then worked out which we felt we needed to improve.

We needed to work out which issues to fix first, so we each used the voting functionality in Miro to vote for the issues we felt were the most important. This made the analysis fair, as we could each talk through and explain our reasons.

Then, we needed to put the highest-voted insights in order, based on which would deliver the highest user value if solved first. We ended up with 5 key insights for the ‘report a flood’ online form, and from these we came up with our prioritised recommendations to improve the form.

Recommendations to improve the form

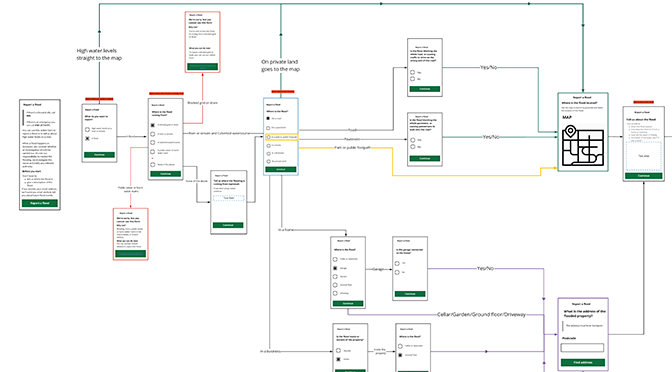

Most of the insights centred on how participants used the map. Some did not understand what they had to do to make a report (click on an area on the map to bring up the ‘report a flood’ button). Others weren’t aware that they had to click the magnifying glass in the search bar to enter the street they selected.

So, our recommendations for improvements included providing clear instructions for how to use the map and improving the map functionality, so that when the address appears in the search bar, the user is taken straight to the location without having to click anything.

These solutions, as well as the others prioritised from the key insights, have been turned into story cards to go in the backlog for this project.

Improving our process for conducting unmoderated remote user testing

As this was our first attempt at unmoderated remote user testing, we knew that this would be a learning experience and that we’d need to make improvements to the process. We wanted to gather insights into how well participants understood the instructions we provided, as well as how they conducted the sessions by themselves.

‘For me personally, I think not so many details on one page…’

Key insights for improving the instructions for setting up Lookback:

- the instructions to guide the participant through the process are lengthy – we need to make them more concise

- we need to emphasise how important it is for participants to turn off notifications on their device, so that personal notifications such as text messages or emails don’t get recorded

- we need to make the instructions on how to do this clearer

- some participants struggled with content being in both the email and the instructions; they found it confusing going back and forth between emails, the Lookback app and the instructions

- one participant needed some assistance to get to the flooding form itself to start the task. We need to make the process as easy as possible, so participants don’t need someone to guide them through it

‘The biggest pain was internet connection dropping out and having to reinstall the software and start again. The testing only took a few minutes.’

Although there is a positive here, in that the session didn’t take them long to complete, we need to be mindful of users’ internet connections. A poor connection may affect how quick and simple it is to install the Lookback app, and could mean the process takes some users a long time to complete. We need to ensure that these sessions don’t take up too much of participants’ spare time, as they are completing these tasks in their own time to help us.

More positives

The videos demonstrated that most participants understood the part of the instructions which encouraged them to speak their thought process aloud as they completed the task. This really helped when we watched the videos, because hearing them talk us through it let us better understand the reasoning behind their actions.

Some participants found setting up the session, including installing the Lookback app, quite straightforward and were able to do this without any assistance. This is great feedback for our first attempt, but we are mindful that not everyone finds this process straightforward and we are thinking about how we can make this process easier for those who found it difficult.

Our next steps will be to analyse all the feedback on how participants found setting up the session, to fully understand how we can make improvements for next time. But, for our first attempt at conducting unmoderated remote user testing, we can take away a lot of positives, as well as some lessons on how we can make this process even better for people.